Private AI Governance Has an Incentives Problem

On Dean Ball's proposal, lessons from 2008, and better alternatives

Update: Bullish Lemon is now AI Policy Takes! I have consulted with the powers-that-be (Tim Lee, Sonnet 4.5, etc.), and I think this makes sense for the direction the blog is (has been, will be) heading. Alright, back to the post.

Balancing safety against progress is a concern of any regulation, and nowhere do the stakes seem as high as in AI governance. The upside? Societal transformation ushering in luxury space communism, some claim. The downside? Literal human extinction, others lament. Striking a balance between the two seems pretty important.

In the midst of this discourse, Dean Ball offers an interesting, thoughtful proposal: private governance.

The basic intuition is that maybe public sector lawyers shouldn’t be the primary regulators of a technology that is actively creating new mathematical knowledge.

The actual proposal is to replace “command and control” governance with a good ol’ competitive market. In this market, private regulators compete to offer voluntary certification to AI developers, who receive safe harbor from tort liability in exchange for getting certified. The idea is that this incentivizes institutional innovation while maintaining democratic oversight, moving us toward a dynamic system better suited to the coming era of AI.

As a perennial markets fan, I find the proposal genuinely compelling. But I think it fundamentally misunderstands the incentives that define a regulatory market—one where the consumers are the regulated entities themselves. As proposed, private governance would generate a race to the bottom that the government (and society) will inevitably lose, even with the proposed mitigation mechanisms. The situation has striking parallels to credit rating agencies in the 2008 financial crisis. Nonetheless, there are promising alternative approaches that retain private governance’s strengths while addressing these flaws.

Private Governance Explained

The basic structure of private governance is as follows[1]:

First, legislators authorize a government commission to license private AI regulatory organizations. To get licensed, a private regulator must demonstrate technical and legal credibility, plus independence from industry.

Next, AI developers can voluntarily opt in to certification from a licensed private regulator. Certification verifies that the developer meets the technical standards for safety and security published by the private regulator. In exchange for certification, a developer receives safe harbor from tort liability for misuse of its models by others; Ball sees this kind of liability as one of the most severe risks to AI development.

Regarding enforcement, the government maintains oversight by periodically auditing and re-licensing private regulatory organizations, which in turn periodically audit and re-certify developers. Private regulators can revoke a developer’s certification for non-compliance.

The proposal also includes a key safeguard against a “race to the bottom”: if a private regulator’s negligence (e.g., ignoring developer non-compliance) leads to harm, the government can revoke its license, which eliminates tort safe harbor for all entities certified by that regulator. This incentivizes developers to avoid flocking to the most lax regulator, since one company’s poor behavior threatens everyone’s legal protections.

The proposal’s benefits are compelling in theory: market forces drive regulatory innovation, private organizations bring technical competence, and government licensing maintains democratic oversight.

Regulatory Markets’ Misaligned Incentives

Unfortunately, there’s a fundamental problem with applying market logic to regulation here. To clarify the issue, let’s lay out the proposal’s underlying reasoning.

Private governance relies on a simple chain of logic: competition drives innovation, and innovation produces better regulation. This mirrors an equally simple general economic argument: competition drives innovation, and innovation produces better goods.

However, this reasoning collapses when we ask: better for whom? Consumer preferences in a regulatory market differ fundamentally from consumer preferences in typical markets. In most markets, consumers are individuals and households. When you (yep, you) go to buy something, you’re looking for something affordable, useful, and harmless (among other things)—roughly what society wants too. Consumer preferences broadly align with social welfare, and because innovation is driven by consumer preferences, innovation generally promotes social welfare. But in a regulatory market, the “consumers” would be AI developers. Their preferences are misaligned with social welfare, which is why regulation is even necessary in the first place.

To be specific, AI developers want minimally burdensome regulation that still provides (under Ball’s proposal) legal safe harbor. On the other hand, society wants minimally burdensome regulation that still effectively prevents harm.

Critically, attaining legal safe harbor does not necessarily require effectively preventing harm. In fact, achieving the former but not the latter is the ideal outcome for developers. The regulatory costs developers actually face make this clear.

Sure, there are costs associated with regulatory implementation: initial certification, compliance documentation, audit preparation, etc.

But the real big hitters are development delays from regulation. With rapid development cycles and major first-mover advantages, getting unexpectedly stuck in compliance hell while competitors steam ahead can mean losing potentially billions of dollars in expected value. Implementation is annoying but pocket change in comparison, which is why many developers are actively lobbying for federal-level regulations that impose uniformity and decrease uncertainty.

Developers will do almost anything to avoid these delays. And therein lies the fundamental tension: any effective regulation necessitates the risk of development delays. Catastrophic risk scenarios often specifically revolve around the rapid development of dual-use capabilities, the downside of which is beyond society’s ability to handle, and the upside of which is...insanely profitable. That’s why regulation is needed in the first place—so firms don’t race to sacrifice society at the altar of profit maximization.

But in a regulatory market, developers’ preferences drive innovation, and their priorities are: 1) attain legal safe harbor, and 2) prevent development delays. Safety is a tertiary concern, at best.
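A toy model makes this divergence concrete. It is a minimal sketch under invented assumptions: the three regulators (“Rigorous,” “Middle,” and “Theater”) and every number are hypothetical. The developer minimizes expected cost, meaning delay costs plus the probability that its regulator’s license is revoked times the liability exposure that revocation would restore; society maximizes harm prevented minus delay costs. Note that `harm_prevented` never enters the developer’s objective.

```python
from dataclasses import dataclass

# Toy model of a regulatory market. All names and numbers are hypothetical.

@dataclass(frozen=True)
class Regulator:
    name: str
    delay_months: float    # expected development delay imposed on a certified developer
    harm_prevented: float  # share of catastrophic harm actually averted (0 to 1)
    p_revocation: float    # chance the regulator's license (and thus safe harbor) is revoked

def developer_cost(r: Regulator, delay_cost: float, liability: float) -> float:
    # The developer pays for delays and for the risk of losing safe harbor.
    # harm_prevented does not appear in this objective at all.
    return r.delay_months * delay_cost + r.p_revocation * liability

def social_value(r: Regulator, delay_cost: float, harm_value: float) -> float:
    # Society cares about harm actually averted, net of delay costs.
    return r.harm_prevented * harm_value - r.delay_months * delay_cost

REGULATORS = [
    Regulator("Rigorous", delay_months=6.0, harm_prevented=0.9, p_revocation=0.01),
    Regulator("Middle", delay_months=3.0, harm_prevented=0.6, p_revocation=0.02),
    Regulator("Theater", delay_months=0.5, harm_prevented=0.1, p_revocation=0.05),
]

def developer_choice(regs, delay_cost=1.0, liability=20.0):
    # Costs are in units of one month's delay cost.
    return min(regs, key=lambda r: developer_cost(r, delay_cost, liability))

def social_choice(regs, delay_cost=1.0, harm_value=100.0):
    return max(regs, key=lambda r: social_value(r, delay_cost, harm_value))

if __name__ == "__main__":
    print("Developer picks:", developer_choice(REGULATORS).name)
    print("Society prefers:", social_choice(REGULATORS).name)
```

With these numbers the developer picks Theater while society would pick Rigorous. The race-to-the-bottom safeguard enters only through `p_revocation`: liability exposure would have to exceed roughly 83 months’ worth of delay costs before the developer switches away from Theater, and the argument below is that sophisticated methodology keeps a theater regulator’s revocation risk low in the first place.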

Why the Safeguard Won’t Work

The proposed mechanism against a “race to the bottom” involves punishing all developers certified by a negligent private regulator. This discourages monopolization by the most lenient regulator, and it incentivizes developers to monitor each other for violations.

I agree that this mechanism prevents obvious negligence, such as the “handshake” deal between a private regulator and a developer used in the paper’s example.

But in practice, the race will happen at the methodological level, not through blatant non-compliance. Driven by developers’ misaligned incentives, private regulators will leverage their technical expertise to develop methods that appear impeccable to the government commission and courts, but preclude costly development delays (and thus, actual efficacy).

Specifically, I expect to see complex frameworks with predictable loopholes:

Monte Carlo simulations run on flawed assumptions, allowing developers to prove “reduced risk” by satisfying easily gamed benchmarks.

Multi-stage review processes checking off scores of technical criteria “critically related” to safety, while silently avoiding thornier concerns.

“Mathematical proofs” of “formal verification” that are impossible for the average regulator (or even a technically skilled one) to actually evaluate.

I expect we’ll see regulatory theater that primarily functions as a legal buttress:

Page upon page of justification for each process.

Extensive paper trails documenting compliance.

Expert input recorded at every step.

But in the end, none of this will serve to actually protect people against critical harms. Even if incidents occur, the private regulator can point to its technically complex and diligently documented methods as a strong legal defense, arguing that incidents were unforeseeable edge cases beyond any reasonable standard. The burden shifts to the government to prove that these apparently sophisticated methods were actually negligent.[2]

Relatedly, despite Ball’s enthusiasm for using AI to govern AI, that particular innovation is unlikely to emerge, precisely because it would create major regulatory uncertainty for developers.

Models are still very much black boxes—when an AI system flags a model, neither developer nor regulator may understand why. Using AI here risks getting stuck in expensive trial-and-error loops trying to fix opaque problems. Rational developers will strongly prefer predictable (and gameable) processes over stochastic systems, so private regulators will avoid AI-based methods to attract customers.

Note that this critique doesn’t stem from general market skepticism. In fact, it’s precisely because I believe in market efficiency that I think a regulatory market would divine developers’ actual rather than idealized preferences (get safe harbor and avoid development delays), and innovate to maximally satisfy them with the minimal required resources (prioritize profitably misleading processes over costly but legitimate safety mechanisms).

Historical Example: Credit Rating Agencies and the 2008 Financial Crisis

Unconvinced? Let’s examine a historical example with striking parallels: the role of credit rating agencies (CRAs) in the 2008 financial crisis.

CRAs are companies that rate the creditworthiness of debt securities, informing buyers of the risk associated with certain investments. The first CRAs were established in the early 20th-century U.S. to mitigate investor uncertainty around railroad bonds, which constituted the largest bond market seen up to that point. In 1975, the SEC began designating certain CRAs as Nationally Recognized Statistical Rating Organizations (NRSROs), whose ratings could then be used in applying the net capital rule for broker-dealers (among other things), codifying the legitimacy of their ratings.

Around the 1970s, CRAs also began switching from a “subscriber-paid” to an “issuer-paid” business model. Rather than investors paying to access CRA ratings, securities issuers paid to get rated. A big reason for this change was the spread of the photocopier: with photocopying and fax, it became trivially easy for investors to share ratings information, making the old subscriber-paid model unprofitable.

However, as was later recognized, the problem with the new issuer-paid model was that it created a major conflict of interest. Issuers wanted to receive inflated ratings since it made their products appear safer, increasing revenues. Since issuers could “shop around” for CRAs, this incentivized CRAs to inflate ratings. This was particularly the case in the structured products market, where a small number of issuers accounted for the vast majority of rated products (and so their choices greatly affected CRA market share and profits).

One of these structured products was mortgage-backed securities (uh-oh).

During the housing boom, CRAs gave top-tier AAA ratings to many of these securities. Their issuance increased tenfold from 2000 to 2006, and rating them grew to account for over 50% of the total revenue of Moody’s (a top CRA/NRSRO). As we now know, the CRAs fundamentally misjudged these risky mortgage products: the majority were later downgraded to “junk” status as the housing bubble burst, significantly exacerbating the broader financial collapse.

Investigations revealed that inflated ratings were caused by factors including:

Monte Carlo simulations with inputs derived from unrepresentative data.

Reverse-engineering of CRA models by issuers seeking favorable ratings.

Inaccurate assumptions about correlations between regional defaults.

It was hard to spot these problematic details hidden within such complex models, and they remained undetected until it was too late.
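A small simulation shows how a single correlation assumption can turn a “safe” senior tranche into a risky one. This is a minimal sketch, not any CRA’s actual model: it assumes a stylized pool of 100 mortgages, each with a 5% default probability, a one-factor Gaussian model in which one shared shock (think of a nationwide housing downturn) drives the correlation between defaults, and a hypothetical senior tranche that takes losses once more than 15% of the pool defaults. All parameter values are invented for illustration.

```python
import random
from statistics import NormalDist

def senior_tranche_hit_rate(n_loans: int, default_prob: float, correlation: float,
                            attachment: float, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of P(share of defaulted loans > attachment).

    One-factor Gaussian model: loan i defaults when
    sqrt(rho) * Z + sqrt(1 - rho) * eps_i < Phi^{-1}(default_prob),
    where Z is a shock shared by every loan and eps_i is loan-specific noise.
    correlation = 0 reproduces the independent-defaults assumption.
    """
    rng = random.Random(seed)
    cutoff = NormalDist().inv_cdf(default_prob)  # same average default rate for any rho
    w_shared = correlation ** 0.5
    w_own = (1.0 - correlation) ** 0.5
    hits = 0
    for _ in range(trials):
        z = rng.gauss(0.0, 1.0)  # shared shock, e.g. a nationwide housing downturn
        defaults = sum(
            1 for _ in range(n_loans)
            if w_shared * z + w_own * rng.gauss(0.0, 1.0) < cutoff
        )
        if defaults / n_loans > attachment:
            hits += 1
    return hits / trials

if __name__ == "__main__":
    for rho in (0.0, 0.3):
        p = senior_tranche_hit_rate(100, 0.05, rho, attachment=0.15, trials=10_000)
        print(f"correlation={rho:.1f}: senior tranche hit in {p:.2%} of scenarios")
```

Both runs share the same 5% average default rate; only the correlation parameter differs, and it is exactly the kind of input that is easy to overlook inside a complex model.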

Some argue that technical failures played a more significant role than this conflict of interest, but even these critics concede that the latter played a crucial secondary role in leading CRAs to neglect rigorous evaluation of their financial models, allowing technical issues to persist undiscovered for an unreasonable amount of time.

In any case, this counterpoint doesn’t weaken my argument; it strengthens it. It demonstrates that misaligned incentives alone, even without intentional deception, are sufficient to cause catastrophic modeling failures. Given the complexity of these models, mitigating risk requires constant vigilance, and without properly aligned incentives, the profit motive alone is simply inadequate to sustain it.

Alternative Approaches

I want to reiterate that there’s much in Ball’s proposal worth preserving. Harnessing market forces to drive regulatory innovation remains compelling. I also think criticism should always offer viable alternatives.

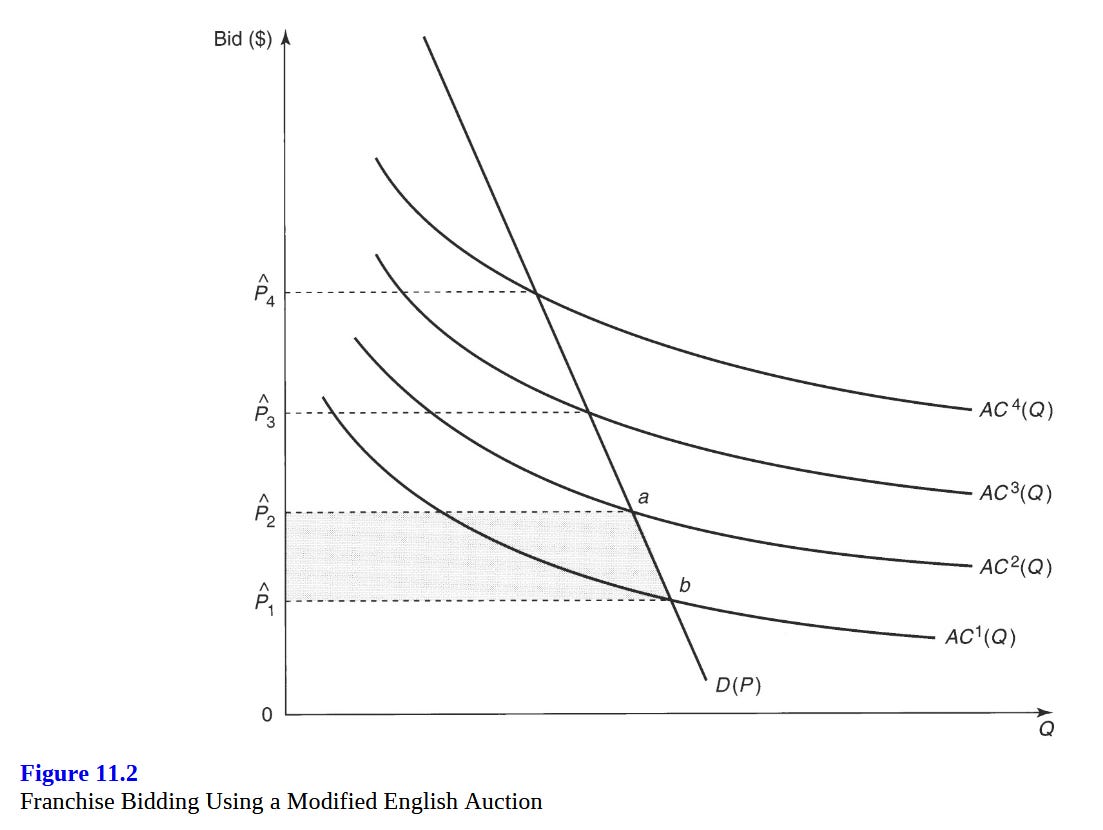

A promising approach might be to retain the competitive aspect of private governance, but change where and when this competition occurs. One way to achieve this could be through a franchise system, where a single private regulator is contracted by government through a bidding process. Here, competition occurs “for the field” rather than “within the field.”

While initially designed as an alternative way to regulate natural monopolies, franchising is generally advantageous in situations where outright privatization would be difficult, or where traditional regulation would be costly.

Applied to AI regulation, the government could auction the right to serve as the private AI regulator for a fixed period (say, two years). Competition happens during the bidding phase, not afterward. Potential regulators compete on the criteria the government prioritizes: safety, innovativeness, independence from industry, and implementation costs.
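A scoring rule for such an auction might look like the sketch below. It is a hypothetical illustration, not a worked-out mechanism design: the bidders, their 0 to 10 criterion scores, and the weights are all invented, and in practice the scores would come from the government’s own technical evaluation of each bid. The point is that the weights encode what the buyer values, so a heavy weight on safety makes a cheap but weak bid lose.

```python
from dataclasses import dataclass

# Hypothetical franchise-bid scoring. Bidders, scores, and weights are invented.

@dataclass(frozen=True)
class Bid:
    bidder: str
    safety: float        # evaluated safety efficacy, 0 to 10
    innovation: float    # novelty and quality of methods, 0 to 10
    independence: float  # independence from industry, 0 to 10
    cost: float          # implementation cost over the term, 0 (cheap) to 10 (expensive)

# Weights chosen by the government; safety dominates.
WEIGHTS = {"safety": 0.5, "innovation": 0.2, "independence": 0.2, "cost": 0.1}

def score(bid: Bid, weights: dict) -> float:
    # Higher is better; cost counts against the bid.
    return (weights["safety"] * bid.safety
            + weights["innovation"] * bid.innovation
            + weights["independence"] * bid.independence
            - weights["cost"] * bid.cost)

def award_franchise(bids, weights=WEIGHTS) -> Bid:
    # The single highest-scoring bidder wins the franchise for the whole term.
    return max(bids, key=lambda b: score(b, weights))

BIDS = [
    Bid("Lab A", safety=8.0, innovation=7.0, independence=8.0, cost=7.0),
    Bid("Lab B", safety=4.0, innovation=8.0, independence=6.0, cost=3.0),
]

if __name__ == "__main__":
    winner = award_franchise(BIDS)
    print(winner.bidder, round(score(winner, WEIGHTS), 2))
```

Reweighting toward low cost (e.g., safety 0.1, innovation 0.2, independence 0.1, cost 0.6) flips the award to Lab B, which is the point: the weights are where the government’s priorities enter.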

This alternative addresses the proposal’s key issues in several ways:

First, competition will focus on what society values: social welfare. During the auction, the government can weigh safety efficacy heavily in its evaluation criteria. Then, bidders compete to prove their approach will actually prevent harm, not just provide legal cover, because that’s what the consumer (i.e., the government) is buying.

Second, it enables a more feasible and effective system of oversight. Rather than monitoring the complex technologies of multiple regulators indefinitely, the government only needs to: 1) carefully evaluate a few serious bidders initially, and 2) monitor one entity during its franchise period. This is far more realistic given limited government technical capacity, and it makes deception or negligence much harder to hide.

Third, the bidding process exposes methodological weaknesses. Competitors have strong incentives to expose flaws in rivals’ proposed approaches, much like red-teaming. A bidder claiming its models are more rigorous can, and will, point out exactly why its rivals’ assumptions are flawed. Critically, this scrutiny happens before any harm occurs, not after.

Beyond franchise bidding, another alternative approach would be to establish an advance market commitment (AMC): a commitment to purchase or subsidize a product if successfully developed. Historically, these have been offered by governments and non-profits for the development of vaccines or other neglected medical treatments, but the general idea can be applied to incentivize any desired innovation.

As mentioned, Dean Ball seems excited about the possibility of using AI to govern AI. While this specific application certainly doesn’t encompass the entirety (or potentially even a small fraction) of the space of valuable regulatory innovations, it still seems like worthwhile technology to single out for development incentives.

In general, AMCs completely avoid the problematic incentive structure of a regulatory market. Instead of developers paying private regulators for certification, which creates a conflict of interest, the government pays experts to solve society’s hardest safety problems. Researchers and organizations compete to deliver what the government specifies, not what helps developers’ bottom line.

Of course, the best solution might be a combination of these two alternative approaches, or something else entirely. Regulation is an underratedly complex and fascinating topic, and there are plenty of historical examples, economic studies, and legal analyses to draw from—perhaps material for another post.

The stakes are too high to get this wrong. As AI’s capabilities accelerate, we need governance structures that actually align innovation with social welfare, not just the appearance of it. Private governance as currently proposed would likely give us the latter while claiming the former. But luckily, we can do better.

[1] Full outline of the proposal (p. 26):

A legislature authorizes a government commission to license private AI standards-setting and regulatory organizations. These licenses are granted to organizations with technical and legal credibility, and with demonstrated independence from industry.

AI developers, in turn, can opt in to receiving certifications from those private bodies.

The certifications verify that an AI developer meets technical standards for security and safety published by the private body. The private body periodically (once per year) conducts audits of each developer to ensure that they are, in fact, meeting the standards.

In exchange for being certified, AI developers receive safe harbor from all tort liability related to misuse by others that results in tortious harm.

The authorizing government body periodically audits and re-licenses each private regulatory body.

If an AI developer behaves in a way that would legally qualify as reckless, deceitful, or grossly negligent, the safe harbor does not apply.

The private governance body can revoke an AI developer’s safe harbor protections for non-compliance.

The authorizing government body has the power to revoke a private regulator’s license if they are found to have behaved negligently (for example, ignoring instances of developer non-compliance).

[2] More fundamentally, incident-based enforcement is impractical for catastrophic risks from AI systems. Private regulators can easily argue that incidents result from popularity and market share rather than regulatory failure. OpenAI has several times the brand recognition of its competitors, so incidents involving its models could be attributed to usage volume rather than inadequate oversight. How many catastrophic incidents killing hundreds of people must occur before the government can prove a regulator is irresponsible? An incident-based system would suffer from confounders (usage volume, model differences, external factors), and by the time evidence of a regulator’s causal role accumulates, massive harm will already have occurred.
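The attribution problem can be made concrete with a standard comparison of two incident rates. This is a minimal sketch with hypothetical numbers: regulator A’s certified developers see ten times the usage of regulator B’s. Conditional on the total number of incidents, if both groups were equally risky per unit of usage, each incident would fall on B’s side with probability equal to B’s usage share, so a one-sided binomial tail gives a p-value for the claim that B’s developers are riskier.

```python
from math import comb

def binomial_upper_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

def rate_comparison_p_value(incidents_a: int, usage_a: float,
                            incidents_b: int, usage_b: float) -> float:
    """One-sided test that B's developers are riskier per unit of usage than A's.

    Conditional on the total incident count, equal per-usage rates imply that
    each incident lands on B with probability usage_b / (usage_a + usage_b).
    """
    total = incidents_a + incidents_b
    share_b = usage_b / (usage_a + usage_b)
    return binomial_upper_tail(incidents_b, total, share_b)

if __name__ == "__main__":
    # Hypothetical: A's developers see 10x the usage of B's.
    a_incidents, a_usage = 12, 10.0
    b_incidents, b_usage = 3, 1.0
    print("Raw incident counts:", a_incidents, "vs", b_incidents)
    print("Incidents per unit of usage:", a_incidents / a_usage, "vs", b_incidents / b_usage)
    p = rate_comparison_p_value(a_incidents, a_usage, b_incidents, b_usage)
    print("One-sided p-value:", round(p, 3))
```

Here B’s developers have 2.5 times A’s incident rate per unit of usage, yet only a quarter of A’s raw incidents, and after fifteen hypothetical catastrophes the usage-adjusted difference still falls short of a conventional 5% significance threshold (p ≈ 0.15). That is before accounting for the other confounders, such as model differences and external factors.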